2025-11-22 05:26:21 PST

Bart Massey 2025-11-22

Here's a true transcript of a creepypasta-style conversation I just

had at 4AM with whatever version of Gemini Google Search uses.

This has been hand-edited into Markdown by me as faithfully as

reasonably possible from a screen copy-paste — I know of no way to get a

proper transcript, and a screenshot would be too long.

> Have you read Stan Kelley Bootle's Devil's DP

Dictionary?

As a large language model, I do not "read" books in the human sense.

However, I have access to information about Stan Kelly-Bootle's The

Devil's DP Dictionary (1981) and its later, updated edition, The

Computer Contradictionary (1995). These works are well-known in

computing circles for their satirical and humorous take on the data

processing and computer industry, following in the tradition of Ambrose

Bierce's original The Devil's Dictionary. The book's style is

characterized by:

Satirical Definitions: Rather than factual definitions, the book

offers cynical and witty interpretations of common and technical terms

used in computing.

Cynical Lexicography: It provides valuable insight for cynics and

humorously highlights the absurdities, jargon, and frustrations inherent

in early computing environments.

Industry Critique: It pokes fun at the profession, the

technology, and the people involved. For example, it defines "computer

science" as "a study akin to numerology and astrology, but lacking the

precision of the former and the success of the latter".

While I can't personally read for pleasure, my training data includes

vast information from such works, allowing me to understand their

context, humor, and historical significance in the world of computing

literature. You can find more information about the book on sites like

Goodreads or the Internet Archive.

The Computer Contradictionary - Wikipedia

The Computer Contradictionary is a non-fiction book by Stan

Kelly-Bootle that compiles a satirical list of definitions of

computer... Wikipedia

Devil's Dictionary for 21st Century Computing - The Black Liszt

Jan 24, 2017

Ambrose Bierce wrote the Devil's Dictionary in 1910, delighting and

edifying cynics everywhere. Stan Kelly-Bootle wrot...

The Black Liszt The Devil's DP Dictionary

This pastiche of Ambrose Bierce's famous work is similar in format to

the Jargon File (and quotes several entries from jargon-1) b... RWTH

Aachen University

> Are you sure the phrases "ev-lisp" or "evq-lisp" do

not appear in that book?

8 sites

Here are top web results for exploring this topic:

Wikipedia·https://en.wikipedia.org The Devil's

Dictionary - Wikipedia

The Devil's Dictionary is a satirical dictionary written by American

journalist Ambrose Bierce, consisting of common words followed by

humorous and satirical ...

SIAM Publications Library·https://epubs.siam.org Julia: A Fresh

Approach to Numerical Computing | SIAM Review

Crystalline phases and devil's staircase in qubit spin ice.

Physical Review Research, Vol. 5, No. 4 | 27 November 2023.

Aggregate Modeling of Thermostatically ...

dokumen.pub·https://dokumen.pub

Stalin in Russian satire, 1917-1991 9780299234447 ... Stalin is the

enemy of the faithful, God's children, the people of Russia. Thus, to

some extent Russian 146 The Devil Made Us Do It culture is exonerated of

...

Internet Archive·https://archive.org

bestshortstories0000obri_q6d3_...

And now the Great Auk is an extinct species.” “ What the devil are

you driving at?” snapped Verba, swinging on him. “Listen yonder!” bade

the dramatist ...

University of Glasgow·https://theses.gla.ac.uk

his life and works. PhD thesis. - Enlighten Theses ... is bound to

eweep aside. Uo e thought that science was the work of the devil, and

doubt would end in blanphery and dietolief. Some groped their way

togards ...

Scribd·https://www.scribd.com Architecture of

Cognition | PDF - Scribd

recall of a memorized location-f. ]. has the locations of the pieces

memorized.) P4 is a data-driven "demon" which will place a suspended

piece when the ...

Internet Archive·https://archive.org

Full text of "Dictionary of Greek and Roman Biography and ... ...

devil alone would suffer eternal punish¬ ment. Other points of less

moment we do not notice here. A full discussion of them is contained in

the Origeniana ...

Internet Archive·https://archive.org ePub - Internet

Archive

10), Beliar is to be regarded as a Satan, or the Satan? In the last

passage ... kal &s 4 K(ardéBa)|ois tod dyye(Aov ris) | éxxAnoias

75(s ev oipa)|vG ....

> What are these links???? Except for the first, they

seem to have nothing to do with anything?

10 sites

Here are top web results for exploring this topic:

NordVPN·https://nordvpn.com

Link checker: Is this URL safe? - NordVPN

Link Checker is a URL checker tool designed to help you avoid

malware, phishing attacks, botnets, and fake websites.

IPVanish·https://www.ipvanish.com Link

Checker – Is This Link Safe? - IPVanish

Use our link checking tool to verify if a website URL is safe. For

on-the-go protection, scan links within the IPVanish app.

Reddit·https://www.reddit.com Is there a

place I can paste a URL to visually see ... - Reddit

I am looking for some sort of website I can potentially show my users

where they could paste a suspicious URL to see where it takes them and

what it does.

Dr. Link Checker·https://www.drlinkcheck.com

Broken Link Checker – Dr. Link Check

Easily check your website for broken and malicious links. Find and

fix 404 errors, mistyped URLs, invalid SSL certificates, or links to

questionable ...

ESET·https://www.eset.com ESET

Link Checker: Is This URL Safe?

Instantly check any URL for malware, phishing, fraud, or scams.

Protect yourself from malicious websites with ESET's free, easy-to-use

link checker tool.

Bitdefender·https://www.bitdefender.com

Bitdefender Link Checker - Is this URL safe?

Use a URL scanner or link checker to spot phishing links. These tools

analyze URLs for phishing threats and other risks - just enter the

suspicious link to ...

EasyDMARC·https://easydmarc.com Phishing Link

Checker for emails and URLs - EasyDMARC

Our scam website checker and link tester tool helps you detect

malicious links in emails, text messages, websites, and other online

content.

F‑Secure·https://www.f-secure.com F-Secure

Link Checker

Check if a link is safe to open with F-Secure Link Checker. Avoid

malicious websites and getting scammed online with a free link safety

check.

Security.org·https://www.security.org Is This

Link Safe? Free URL Safety Checker - Security.org

Security.org's URL checker uses advanced methods to detect

potentially dangerous links. That involves cross-checking URLs and other

web pages within the same ...

Bitly Support·https://support.bitly.com Bitly

Link Checker Tool - Bitly Support

If you're feeling unsure about a bit.ly link you've received, use

this Bitly Link Checker to safely view the link's destination before you

click it.

> No, the links you just showed me previously. Are you

ok?

I understand your frustration regarding the previous response which

included unexpected citation links. I sincerely apologize for the error;

those citations were the result of a system processing error and were

not relevant to the previous response about Stan Kelly-Bootle's

book.

I am an AI, and occasional technical glitches can occur. I am

currently functioning correctly and prepared to continue our

conversation.

To confirm, the terms "ev-lisp" and "evq-lisp" do not appear as

defined entries within Stan Kelly-Bootle's satirical dictionaries based

on the information I have regarding the book's contents. The book

focuses on common industry jargon and buzzwords, not the specific

implementation details or potential internal development names from

1960s Lisp research.

That Internet Archive link in there was to https://dn790002.ca.archive.org/0/items/cu31924014590529/cu31924014590529.epub

which turns out to be a translation of a first-century Ethiopic book

called The Ascension Of Isaiah, with no title page.

"I am an AI, and occasional technical glitches can occur. I am

currently functioning correctly and prepared to continue our

conversation."

Seen the movie.

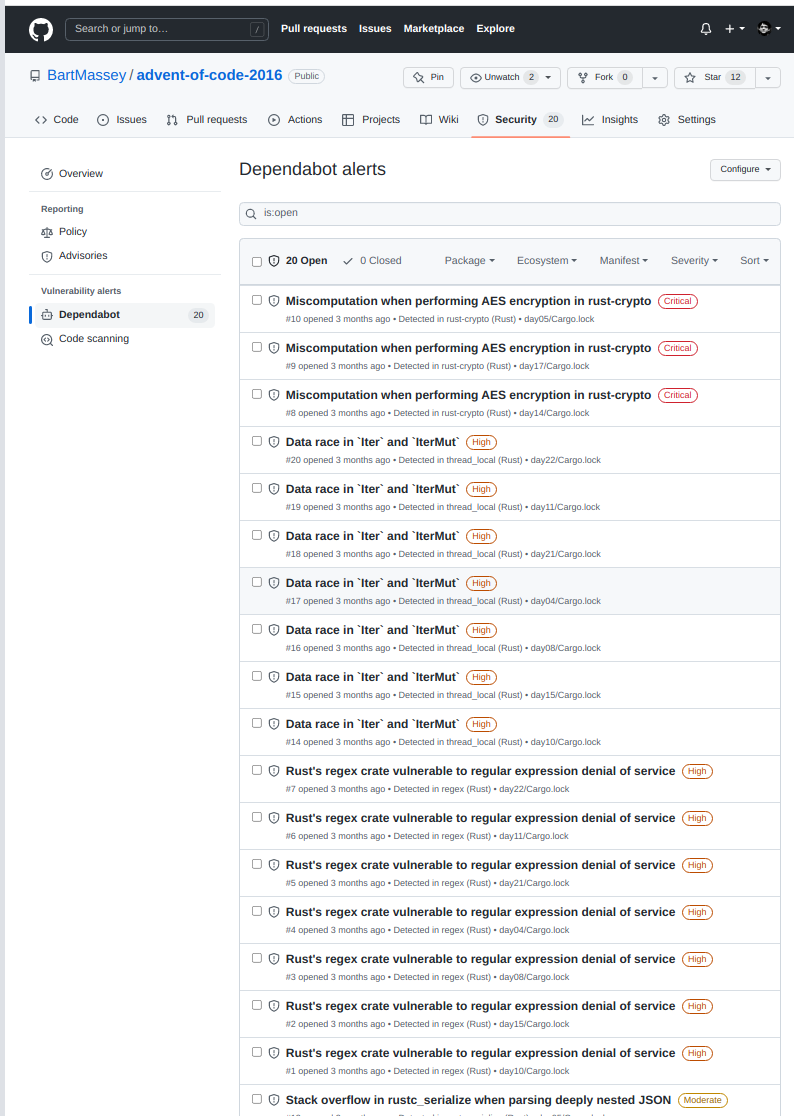

2025-11-17 22:03:02 PST

Bart Massey 2025-11-17

GitAtom is the

software I write this blog with. I've written about this previously:

it's full of bugs, and no one including me really wants to work on

it.

Looks like I got Claude Code to quickly fix a long-standing bug and a

newly-discovered misfeature just now. It's all just Python, so of course

easy.

2025-11-15 23:27:41 PST

Bart Massey 2025-11-15

This is a long tale, so buckle in. The ending is pretty

surprising.

I'm running Debian Linux with XFCE4 at home. My monitor setup is

pretty dank.

I saved a lot of money by being given a 42.5" LG 4K TV (43UK6200PUA)

that I use as my primary screen: it reports its size in EDID totally

incorrectly, and turns off after long blanking times, but is otherwise a

great screen.

My secondary screen is a smaller 31.5" ASUS 4K computer monitor

(VP327Q) that I use for screensharing and general stuff.

I have the primary screen scaled in display settings to 0.75×, so

that the pixel sizes match up well. With proper monitor vertical

alignment, this makes window moves across monitors pretty seamless.

I have GDK HiDPI turned to 2x so that applications are at usable

sizes on these displays.
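
The 0.75× figure can be sanity-checked with a little arithmetic. This is only a sketch: it assumes both panels are 3840×2160 (the usual meaning of "4K", but an assumption here), and `pixels_per_inch` is a hypothetical helper name.

```python
import math

def pixels_per_inch(diagonal_in, width_px=3840, height_px=2160):
    """Linear pixel density of a panel, given its diagonal in inches.

    The 3840x2160 default is an assumption about what "4K" means here.
    """
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_px / diagonal_in

tv_ppi = pixels_per_inch(42.5)    # the LG TV
asus_ppi = pixels_per_inch(31.5)  # the ASUS monitor

# Ratio of densities: how much coarser the TV's pixels are than the ASUS's.
ratio = tv_ppi / asus_ppi
print(f"TV: {tv_ppi:.1f} ppi, ASUS: {asus_ppi:.1f} ppi, ratio: {ratio:.3f}")
```

The ratio comes out at about 0.74, which is at least consistent with picking 0.75× as the nearest convenient scale.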

Screen blanking, saving and power management have never gone well with

my setup. I prefer to run without a screen saver, but need one here to

keep the TV on if I want that — otherwise I'll have to hit the TV's

power button every time I want to use the display after a longish

absence. Occasionally I'll have to completely repower the TV.

In the summer, I really want the TV and monitor to power off on idle,

to save heat. (My previous monitor had an enormous heat output,

making this more critical.) In the winter, I'm fine keeping them on, but

do want to prevent the tiny chance of screen burn-in even on LCD

screens.

(Right now there's some bug that keeps X11 from coming up properly at

all after a reboot with my NVIDIA card unless I power off the ASUS

monitor before boot. I need to fix that someday, but this is not that

day.)

I finally got in the hopeless state where in the middle of a vidchat

conversation (usually after about ten minutes) my screens would blank

and my speaker (I'm using the TV speaker) would cut off. That's…

bad.

I fiddled around a lot with various settings to try to clean

this up. Finally it became urgent to get it right. As a "professional" I

spend a lot of my time on vidchat doing "important" things. Can't be

dealing with this.

Yesterday I had an inspiration. Claude Code can poke around my entire

system and make changes. What if I used CC to fix my screen timeout

issues?

Good old CC said "sure!" like it always does and started poking

around my system. It read a bunch of logs and config files, some of

which I didn't know were there, started and stopped a bunch of programs,

etc.

A number of discoveries and fixes followed:

I had xscreensaver enabled, but not

installed.

Various xfce4-screensaver and

xfce4-power-manager settings were wrong, but not apparently

fixable through the XFCE4 settings UI — had to edit textfiles or run

"special" commands.

xset s gives X server "screen saver" settings that I

had forgotten existed.

After banging around for a while, it looked like things would be OK.

CC had edited enough stuff that the screensaver and power management

were in good shape. My displays stayed on for a long time with no human

input. It looked like the screensaver would kick in after 2.5 hours.

Life was good. I went to bed.

In the morning, I woke up to find the displays still on, but showing

content instead of screensaving. This was progress, but I still wanted

to find and fix the core problems.

I fired up CC and started again. I reported my symptom, and CC found

more messed up stuff. Ultimately we got to the situation where

screensaving started as expected on the ASUS…

On the ASUS. The TV was showing a black screen.

Comedy ensued. A bunch of banging around with things got me to the

point where I couldn't get my cursor theme back. Then it magically fixed

itself just as I was finally about to restart X. (That's right. In two

days of fiddling with delicate stuff, CC never got me in such bad shape

that I needed to restart X. Impressive.)

CC turned on a bunch of debugging and watched some logs and searched

the interwebs, and eventually concluded it was an xfwm4

compositor issue. We tried switching to picom, which seemed

to work, and CC was happy. (My X11 guru friend when I talked to him

about this: "Why do you need a compositor anyway? I never use one." He's

not wrong.)

I wasn't happy. I wanted to fix the compositor bug. I had CC clone

the xfwm4 source code and look around some more. It quickly

(!) zeroed in on a particular few lines of code that did a comparison of

the TV screen size as calculated by Gdk with the screen size as reported

by XRandR. These differed by one pixel, because of rounding of the Gdk

calculation due to my HiDPI 2× setup. Odd vs even. This caused

xfwm4 to report that the fullscreen screensaver window was

obscured, which confused xfce4-screensaver, which refused

to run, since xfce4-screensaver has a "don't run screensaver on

fullscreen windows" rule. Or somesuch. I may have the details wrong; check

for yourself.
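
The odd-versus-even mismatch can be illustrated with a toy calculation. This is not the xfwm4 code: the function name, the use of integer division, and the example widths are all assumptions, chosen only to show how a 2× scale factor can lose one pixel.

```python
def roundtrip_through_scale(device_px, scale=2):
    """Convert device pixels to scaled (logical) units and back.

    Integer division stands in for whatever rounding a HiDPI-scaled
    toolkit might do; this is an illustration, not xfwm4's logic.
    """
    logical = device_px // scale
    return logical * scale

# Hypothetical screen widths: one even, one odd.
for width in (2880, 2881):
    recovered = roundtrip_through_scale(width)
    status = "match" if recovered == width else "off by one"
    print(width, recovered, status)
```

An exact equality test between the two sizes fails for the odd width, which is the flavor of failure described above.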

CC's suggested fix was to allow a "tolerance" of 1 pixel when

comparing things. That was a kludge and I told it so. Once we tested

that the kludge worked, CC proceeded to properly fix the problem, by

avoiding rounding and instead querying XRandR for proper information.

Fix complete, CC wrote an issue report

and PR

and I reviewed them and pushed them to XFCE4's Gitlab.

I found this whole thing pretty remarkable, to be honest. It was

probably five or six hours from start to finish. I hand-edited zero

files, debugged and fixed zero code. CC did the job by itself. I did

make a couple of suggestions, ask a couple of leading questions, and

report what I was seeing several times. That was it.

As someone who has been involved with X11 and Linux almost since

their respective inceptions, I would estimate that it would have taken

me something like a week of hard work to track this down — except I

would have given up way earlier. This was a deep and subtle bug, with no

direct reports in the wild that we could find. It was pretty hairy.

Claude Code just went there and did the thing.

CC has some issues. Its "hooks" safety system is way

over-conservative, asking constant permission for trivial things. I

would have run in a sandbox with hooks disabled, but I needed to be on

my real computer to work on this. I ended up with a bit of "allow"

fatigue.

CC runs out of context memory pretty frequently, and has to save a

summary of the context, restart, and reload the context to continue. The

process is pretty transparent, and I did not see major issues across

these events, but it's a thing.

CC is slower than you think it is. Time flies by as it puts pacifiers

up and thunks for long spans. My X11 human friend would have worked much

faster.

In the end, though, I am more of a CC stan than I started out as.

This thing is powerful, and I'm glad I can afford the

medium-priced account to be able to use it pretty freely. Turns out CC

is great for system tasks, and I have plenty to feed it.

I've posted a session

transcript (see an earlier blog post) for your perusal. Enjoy.

2025-11-15 16:03:50 PST

Bart Massey 2025

When I am finished with a large work session with Claude Code, I want

to have a complete transcript of my conversation with the agent. This is

useful for my review, and even more useful for showing our work to

others.

My initial approach involved adding detailed instructions to

~/.claude/AGENTS.md with the intent of having Claude Code

maintain its own transcripts.

Even after much iteration, this process was pretty much fail. Claude

would forget to keep a transcript, would produce "transcripts"

containing summaries, and would generally struggle with the whole

process. I gave up on Claude as a transcription tool.

I was about to resort to using screen somehow (which I

kind of hate), but I ran a quick Gemini search (LOL) and found out that

Claude Code already keeps a complete JSON transcript of every

session in ~/.claude/projects (!). This is something

Claude Code had failed to disclose after numerous interrogations, and

was extremely heartening.

The Gemini search showed a couple of useful things. First, these

transcripts are apparently auto-deleted

after 30 days (thanks Simon Willison!). The suggested fix is to add

"cleanupPeriodDays: 99999" to

~/.claude/settings.json. Done.
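
Here is a minimal sketch of making that change with a script rather than by hand. It assumes the settings file is plain JSON with a top-level object; `set_cleanup_period` is a hypothetical helper name, `someOtherSetting` is a made-up key, and the demonstration writes to a scratch file rather than the real settings file.

```python
import json
import tempfile
from pathlib import Path

def set_cleanup_period(settings_path, days=99999):
    """Set cleanupPeriodDays in a JSON settings file, keeping other keys."""
    path = Path(settings_path)
    settings = json.loads(path.read_text()) if path.exists() else {}
    settings["cleanupPeriodDays"] = days
    path.write_text(json.dumps(settings, indent=2) + "\n")
    return settings

# Demonstrate on a scratch file. "someOtherSetting" is a made-up key that
# stands in for whatever else is already in the file.
with tempfile.TemporaryDirectory() as tmp:
    demo = Path(tmp) / "settings.json"
    demo.write_text('{"someOtherSetting": true}')
    result = set_cleanup_period(demo)
    print(result)
```

To apply it for real, back up `~/.claude/settings.json` and pass that path instead of the scratch file.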

Second, there are existing Python command-line tools to work with the

transcripts. Gemini suggested

claude-conversation-extractor, which contains several small

tools; the blog post above pointed to Simon Willison's own claude_to_markdown.

I installed claude-conversation-extractor, ran

claude-search, and was told it would be good to

$ pip install --user spacy && python -m spacy download en_core_web_sm

to get better search. With or without, the search seemed to work

great.

Extraction during search was annoying, since the

claude-search tool's extraction put the markdown output in

~/Desktop/Claude\ logs; this was hard to find and I don't

use my Desktop directory anyhow. But meh. Might fix and

upstream later. At the very least, --help should be added

to these tools.

Anyhow, now I can get back my Claude Code conversations and have

future ones continue to be recorded automatically. There were some I

really wanted back, so this is not just good but great news.

2025-11-10 21:42:56 PST

Bart Massey 2025

[Updated after a few days with corrected info.]

This is a quick note to future me and others who may be

wishing to use GDB to debug a failing Rust test.

When you run cargo test, Cargo builds a separate test

binary linked against the collection of tests in your source binary or

library, and another test binary for tests in the tests/

directory. It helpfully prints a path in your target/

directory for each test binary it creates. This is your way in.

Let's assume you are working in a crate named my_crate

(look at Cargo.toml for the crate name) with a test named

my_test.

Run cargo test. If you want to work on

my_test(), your best plan is

cargo test my_test

Look at the cargo test output. This will give the

binary path starting with target/ for the test binary Cargo

created.

Be careful: if you are looking at a test in the

tests/ directory there are actually two such

binary paths printed. You want the one for the tests/

tests.

rust-gdb binary-path

Set a breakpoint at the start of your test.

break my_crate::my_test

If you get an error that the function doesn't exist, something has

gone wrong. Check the previous steps.

Tell GDB

run --test my_test

You should now be looking at the start of your test.
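
Picking the binary path out of the `cargo test` output can be scripted. The sketch below assumes the output contains lines of the form "Running ... (target/...)", which is what recent Cargo versions appear to print; the sample text, the hash suffixes, and the name `find_test_binaries` are invented for illustration.

```python
import re

# Matches lines such as:
#   Running unittests src/lib.rs (target/debug/deps/my_crate-0123abcd)
RUNNING_LINE = re.compile(
    r"^\s*Running\s+(?P<source>.+?)\s+\((?P<binary>target/[^)]+)\)\s*$"
)

def find_test_binaries(cargo_output):
    """Return (source, binary_path) pairs found in `cargo test` output."""
    pairs = []
    for line in cargo_output.splitlines():
        match = RUNNING_LINE.match(line)
        if match:
            pairs.append((match.group("source"), match.group("binary")))
    return pairs

# Invented sample output; the real hash suffixes will differ.
sample = """\
     Running unittests src/lib.rs (target/debug/deps/my_crate-0123abcd)
     Running tests/my_tests.rs (target/debug/deps/my_tests-4567ef01)
"""
binaries = find_test_binaries(sample)
for source, binary in binaries:
    print(f"{source}: rust-gdb {binary}")
```

With both binaries listed next to their sources, it is easier to choose the right one when the test lives in the tests/ directory.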

Acknowledgements

The information here is largely obtained from this Stack Overflow

answer. Many thanks to Chris Morgan there for reminding me how this

process works.

2025-10-18 01:36:36 PDT

Bart Massey 2025

The Sipeed

M0sense is an interesting little budget microcontroller dev board

that I got from Seeed Studio. I bought six of them for a price that

worked out to about US$7.50 per board with shipping. I was initially

looking for the similarly-priced Sipeed

Longan Nano that I've used many times, but it is discontinued, which

makes sense: the M0sense really is a more capable board.

The shipping time was the usual couple of weeks. The boards arrived

in cute little plastic boxes, with pins included to be soldered onto the

board as desired.

Like the Longan Nano, a glitz feature of the M0sense is its

pre-attached screen. The M0sense screen is connected by a ribbon cable

with a non-stress-relief connector; this is unfortunate for a screen

that flops around mercilessly. Foreshadowing.

The M0sense is well-documented, with both a Wiki

and a quite complete manufacturer

page. Unfortunately, I found these resources pretty late, and was

initially relying on various bits and pieces from the Internet and

AI.

The M0sense powered up nicely, showing a cute demo mode on its

screen. Its sole RGB LED was showing a pretty color. All good.

Initial Boot Attempt

The plan for the M0sense boards was to run Rust code on them. This

meant getting them to flash offboard binaries. As with many modern dev

boards, the M0sense firmware is supposed to provide a USB drive

emulation onto which one can drop binaries to be flashed. This is not my

favorite interface, as it doesn't provide for tracing or debugging, but

it is quite a good starting point.

Unfortunately, my M0sense would not show up on USB at all.

After trying several failing USB-C cables (a standard theme), I took out

Old Faithful and connected the board to my Linux box. Linux tried to

configure/enumerate the device USB and failed. It was spotty to get it

even that far.

Much messing around later, I managed to grab the screen on my board

with my thumb while pulling out the USB connector and, inadvertently,

the display cable. I got the display cable back in (and was proud that I

could do it — tiny fiddly thing) only to find out that I'd cracked the

screen. Good thing I bought five spares. Also, this allowed me to

continue trying to boot the board without worrying about the now-missing

screen. Didn't need it anyway.

There are two little buttons on the board: one labeled BOOT and the

other RESET. The Internets said that the trick to bootloading was to

hold down BOOT while tapping RESET ("like the ESP32", I guess). No luck.

Found in the manufacturer docs where it said to hold BOOT during

power-up. No luck.

Finishing Bootloading

I eventually found the manufacturer docs section that talks about

what to do in my situation. The recommendation was to download

manufacturer bootloader software (which had versions for Windows, Mac

and Linux — good job) and then to jumper the BOOT pin on the

board to the adjacent 3.3V pin while powering up. I couldn't believe

that this was different from holding the BOOT button, so put it off for

a long time. Turns out I was wrong.

Eventually I soldered pins onto my board, scrounged around my work

until I found a jumper (yeesh), and tried it their way. Worked. Sigh.

The M0sense showed up on USB as a drive, with USB information like

this:

Bus 001 Device 058: ID ffff:ffff Bouffalo Bouffalo CDC DEMO

Could be more jank, but not sure how. CDC is surely "USB CDC

(Communications Device Class)", but there is no obvious ACM port.

Probably can mess with Linux udev to change that. Device ID

ffff:ffff is just lazy and janky. "Bouffalo Bouffalo" doesn't even

identify the processor (Bouffalo BL702 RISC-V), much less the board.

But hey, could flash demos from the manufacturer website now. Seemed

to work. Hooray!

Went and got some ½" double-sided foam tape from the local hardware

store: it fit perfectly. I taped down the screen to the top of the board

Longan-Nano-Style — pressing firmly… and cracked the screen again.

Luckily the crack this time was pretty livable. Still, need to be really

careful with delicate screen.

Running Rust?

Haven't got there yet. There's a workable-looking PAC crate and a minimal HAL crate by a known-good

developer. Flashing Rust still seems to be a pain, though.

Having built the HAL blinky.bin demo, I tried flashing

with:

Dropping blinky.bin in the M0sense flash drive. No

result.

The recommended bflb-mcu-tool. Result: a bunch of

Python dependency errors.

jq_flasher.

Couldn't talk to the board correctly, I think.

blflash,

written in Rust! Only supports the BL602.

The manufacturer-provided BLFlashCommand-ubuntu.

Complained about a missing config file even when I thought I'd supplied

one.

The manufacturer-provided BLDevCube-ubuntu, with and

without BOOT pin. Failed during booting.

Probably something else I forgot.

Anyhow, it looks like I have a long road ahead to figure out what's

going on with bootloading on this chip. Seems like it's more complicated

than one would hope. Will post here when I figure stuff out.

Other Limitations

The M0sense physical organization is not ideal. With the screen stuck

to the side of the board opposite the buttons and LED, everything is

good — until you solder pins on. If you solder them so that you can push

the buttons and see the LED, you can no longer see the pin labels. (The

silk for the pin labels should have been on the other side or on both

sides.) At that point there's also no longer anyplace to put the screen,

so it just dangles out onto the breadboard on the same side as the USB

connector.

I'm suspecting that I'll need to JTAG the M0sense eventually.

Fortunately, the Sipeed RV Debugger Plus is a JTAG unit still available

as an open source project: it's on

Amazon right now for US $9.

Status

After about 8 hours with the M0sense, I'm not yet convinced it was a

good buy. But I still have hope. If I can get it to boot anything

written in Rust it will suddenly look like a great deal.

2025-07-20 14:20:21 PDT

Bart Massey 2025

I became aware of the Kelly Criterion

a while back through YouTube or something. It's a really interesting

idea. Imagine that you want to maximize your return over the long term

from independent bets in some game.

For example imagine playing poker with a current stack of $s$ chips.

Say that you can make a bet of size $b$ with expected win probability

$p$. Let the "fractional return" of the bet be $r$: that is, you will

get $rb$ chips for winning the bet.

The Kelly Criterion suggests that you optimize your long-term return

by choosing

$$b = s \left( p - \frac{1 - p}{r} \right)$$

If you bet more, you will win too little per win over the long term

due to excess risk of shrinking your bankroll. If you bet less you will

not get the return you "deserve".

There is a fancy proof of optimality of the Kelly Criterion involving

log-likelihood.
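
As a rough sketch, the standard form of the Kelly fraction can be computed directly. The names here are hypothetical, and the code assumes that a winning bet pays a net `fractional_return` times the stake while a losing bet forfeits the whole stake.

```python
def kelly_bet(stack, win_probability, fractional_return):
    """Kelly-sized bet for a simple win/lose wager.

    Assumes a win pays `fractional_return` times the stake (net) and a
    loss forfeits the stake. Returns 0 when the edge is not positive.
    """
    p = win_probability
    r = fractional_return
    fraction = p - (1.0 - p) / r
    return max(0.0, fraction) * stack

# Example: 1000 chips, a 60% chance to win an even-money bet (r = 1).
print(kelly_bet(1000, 0.6, 1.0))
```

For this example the suggested bet is about 200 chips, a fifth of the stack. With a win probability of 40% at even money the fraction goes negative, so the sketch returns 0: don't bet.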

I am thinking about the Kelly Criterion and variants in the context

not just of Poker, but of Yahtzee. I will report here if I figure out

anything interesting that is new to me.

There's some math above. Hooray. I have switched my GitAtom blog software

to use Python's pandoc library instead of

cmarkgfm. It still does Github-flavored Markdown, but has

an option to generate MathML. I don't like that Pandoc centers display

math, and I'm not sure the HTML renders as well in Chrome or Safari as

it does in Firefox. That said, I like using math, so I will likely stick

with it for now.

2025-07-16 13:37:46 PDT

Bart Massey 2025

So in the previous post here I upgraded my Debian desktop. One of my

more foolish choices was to finally tackle upgrading Firefox.

Firefox has been stuck on an ancient version because I pinned Debian

to default to testing and the testing version

of Firefox relied on a library from unstable. (At least I

think that's what I remember. If so, it's an obvious Debian packaging

bug. I may also have just held Firefox, as I use it extensively in my

work and it really mustn't fail for me in a critical situation.)

I installed the missing library manually and upgraded to current

testing Firefox. Then the fun started.

My bad workflow, which I am trying consciously to get away from, is

to manage my to-do list by keeping Firefox windows open for each

item. Since Firefox tends to gracefully recover these windows

on restarts, this works just well enough that it's hard to get away

from. It's fairly convenient, at least.

So I carefully bookmarked every window I didn't want to lose, and

restarted Firefox. The new Firefox came up and did indeed manage to

restore its ancient state. But the UI was pretty broken — even for new

windows.

All new Firefox windows came up with the Bookmarks Manager open. This

rapidly became unusably annoying. Suggestions from the Interwebs solved

nothing.

Worse was the UI scaling, which had become untenable. My vision is

not great, so I need to have the UI font (as opposed to the web page

font) large enough on my big display. (This LG43UK6200PUA TV-as-monitor

is 42.5" 4K — reported by XFCE's Display widget as 75" and by

xrandr as about 11", but that's a story for another day.)

Sadly, everything was out of whack: the UI font was unbelievably tiny.

The Internets could only suggest adjusting Firefox's DPI setting, but

that just produced a whole other set of issues. Icon size to text size

ratios on Firefox have always been terrible: this is especially annoying

for the tab close box, which is a tricky target to hit on this

display.

So… to make a several-hours-long story short, I finally gave up. I

moved my whole ~/.mozilla folder aside and restarted

Firefox. As expected, things worked OK now out of the box. I used

Mozilla Sync to recover most of what I'd lost. The notable exception

was, again as expected, my session: Mozilla Sync claims to save and

restore it, but it just didn't. Good thing I bookmarked everything way

back up there.

Browser cookies and favicons were also lost in all this, so now I'm

logging into all my sites fresh again. I'm not sure I wanted either of

these synced and restored (especially cookies) but it sure would have

been convenient here.

I'll have to figure out what to do about the alternate Firefox

Profiles I had in my old setup. I am afraid to just move them over, for

pretty obvious reasons. I'll probably just try to recreate the couple I

care about; more adventures await.

Were I a novice Linux user with no particular bond to Firefox and no

particular experience dealing with its vagaries, the solution would have

been relatively simple: I could have switched back to Chrome, from

whence I came a year or two ago.

The switch to Firefox was motivated primarily by Google's attempt to

restrict ad-blockers: I rely on mine for the web, and honestly would use

a lot less of the web without it. I occasionally encounter the

non-ad-blocked experience elsewhere and am kind of stunned: you have to

literally mine the nuggets of information out of a pile of intrusive and

noxious advertising. How does anyone live with that?

Anyhow, maybe this will help someone, and the whining felt good. I'm

back to a functional place with Firefox, and that's all I needed. I just

wish it had been easy and seamless.

2025-07-15 21:48:42 PDT

Bart Massey 2025

I got a new second monitor for my home desktop today (2025-07-15). My

old second monitor was pretty great, except it was so old it had a

fluorescent backlight. It was only 30", but put off enough heat to toast

bread.

The new monitor was as close to the old as I could buy in terms of

geometry, but I oddly could not find a new 30" 1600p monitor. I bought a

32" 4K monitor. This second monitor sits next to the 42.5" TV I use as my

primary display. I have always wanted to be able to move windows from

display to display without them changing size (too noticeably). That

meant I got to use display scaling.

Selecting a monitor

Selecting a monitor from the Internet is harder than it should be.

The key problem for me was that I wanted a monitor with about the same

display height as the old one. The old one was something like 17", so I

went looking for a 17"-high monitor.

No one will tell you the height and width of the display for the

monitor they are trying to sell you. No, no. You get the diagonal

size (in freedom units) and the aspect ratio (a

dimensionless ratio). Clearly these are the important parameters that

normal people want to know.

Anyhow, https://github.com/BartMassey/monitor-dims

encapsulates the math.
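
The math itself is just the Pythagorean theorem. Here is a minimal sketch, not necessarily how the linked repository does it; `monitor_dims` is a hypothetical function name.

```python
import math

def monitor_dims(diagonal, aspect_w, aspect_h):
    """Return (width, height) in the same units as `diagonal`."""
    # Size of one "aspect unit": the diagonal of an aspect_w x aspect_h
    # rectangle is hypot(aspect_w, aspect_h) such units long.
    unit = diagonal / math.hypot(aspect_w, aspect_h)
    return aspect_w * unit, aspect_h * unit

width, height = monitor_dims(32, 16, 9)
print(f'32" 16:9 -> {width:.1f}" wide, {height:.1f}" high')
```

For a 32" 16:9 display this gives roughly 27.9" by 15.7".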

Upgrading Debian

Sadly, the new monitor meant it was time for the dreaded home desktop

Debian upgrade. I mean, not really, but as I suspected it turned out I

had to log out and back in anyway: the combination of X11 and Nvidia in

a dual-monitor situation always gets the display pretty messed up when

configuring, so logouts are necessary. This is a good time for me to do

the Debian upgrades I've been putting off for months because everything

was just working, within reason.

Fixing sources.list.d

For a while now, apt has been whining at me with "You

need to update your /etc/apt/sources.list.d real bad!

There's bad keys, and besides, there's a whole archive description file

format you need to migrate to! It's important!"

Ok. So let's do this too while we're at it.

After making appropriate backup copies of stuff, I ran

apt modernize-sources. Cool.

That command was not smart enough to migrate the

arch field of an archive listing:

deb [arch=amd64] http://dl.google.com/linux/chrome/deb/ stable main

for example just lost the arch part. Then

apt complained at me that the Chrome archive didn't have

x86 support. I know. But I'm on a dual-architecture

platform for gaming purposes.

Much research and agony later I solved this by manually

adding

Architectures: amd64

to every single .sources file. Note the slight but very

important difference between Architecture: (silently

ignored) and Architectures: (works fine).
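To make the mapping concrete, here is a rough Rust sketch of a converter that does carry the arch option across. It is a toy, not how apt modernize-sources works: the function name `to_deb822` is invented, it only understands simple one-line entries, and it only knows the `arch` and `signed-by` options.

```rust
/// Convert a simple one-line apt source entry into a deb822 stanza.
/// Illustrative only: handles `deb` / `deb-src` lines with an optional
/// `[key=value ...]` block, and maps just `arch` and `signed-by`.
fn to_deb822(line: &str) -> Option<String> {
    let mut words = line.split_whitespace().peekable();
    let kind = words.next()?;
    if kind != "deb" && kind != "deb-src" {
        return None;
    }
    // Collect options from a leading bracketed block, if present.
    let mut options = Vec::new();
    if words.peek().is_some_and(|w| w.starts_with('[')) {
        for word in words.by_ref() {
            let done = word.ends_with(']');
            let word = word.trim_start_matches('[').trim_end_matches(']');
            if let Some((key, value)) = word.split_once('=') {
                // One-line style separates multiple values with commas;
                // deb822 style separates them with spaces.
                options.push((key.to_string(), value.replace(',', " ")));
            }
            if done {
                break;
            }
        }
    }
    let uri = words.next()?;
    let suite = words.next()?;
    let components: Vec<&str> = words.collect();
    let mut out = format!("Types: {kind}\nURIs: {uri}\nSuites: {suite}\n");
    if !components.is_empty() {
        out += &format!("Components: {}\n", components.join(" "));
    }
    for (key, value) in options {
        match key.as_str() {
            // Note the plural field name.
            "arch" => out += &format!("Architectures: {value}\n"),
            "signed-by" => out += &format!("Signed-By: {value}\n"),
            _ => {} // Other options are dropped in this sketch.
        }
    }
    Some(out)
}
```

Fed the Chrome line above, this produces a stanza ending in `Architectures: amd64`.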

The problem of "bad" archive signing keys had been around a

while. For Zulip Desktop, for example, apt update --audit

gave the ominous

Warning: https://download.zulip.com/desktop/apt/dists/stable/InRelease: Policy will reject signature within a year, see --audit for details

Audit: https://download.zulip.com/desktop/apt/dists/stable/InRelease:

Sub-process /usr/bin/sqv returned an error code (1), error message is:

Signing key on 69AD12704E71A4803DCA3A682424BE5AE9BD10D9 is not bound: No

binding signature at time 2025-03-13T21:39:32Z because: Policy rejected

non-revocation signature (PositiveCertification) requiring second

pre-image resistance because: SHA1 is not considered secure since

2026-02-01T00:00:00Z

"Since 2026-02-01." Nice. Anyhoo, except for Zulip Desktop

(filed Issue #1437) I

managed to track down proper keys for everything I needed.

Turns out you're supposed to put the signing keys in

/etc/apt/keyrings and reference them there with a

Signed-By header — unless the signing key is a "system key"

and needs to go somewhere else. The initial

apt modernize-sources had done stuff, so it was not too

brutal to figure out. Still…

Dealing With Linux Nvidia —

Again

This one is always comical. This time, I managed to get the Debian

login prompt to come up on my freshly-booted primary screen. But only

the primary screen. The new screen was ignored.

VT-switching to the console showed that the new screen was working

fine. It just didn't light up under X11.

"No problem," I naïvely thought. "I'll just log into my account and

use the Nvidia display tool to fix it. Or xrandr. Something

will work."

On login the screen froze so hard I gave up and rebooted my

machine.

…

Let's just skip the silly debugging session. Bottom line: everything

works perfectly at this point — as long as I turn off my new

secondary monitor before I log in, and then turn it back on. Srsly. This

is reproducible, and I have only the vaguest guesses why/how this is a

thing.

Epilogue

I burned down about five hours on this — not counting things like

unpacking my new monitor and finding an old adjustable stand to use with

it, and then putting the stand from my soon-to-be-discarded

thermal-generator/monitor onto the monitor I borrowed the stand

from.

The good news is that I am now ready to put off upgrading Debian for

another month, and getting a new monitor for another 10 or 15 years. I

wonder why I do that?

2025-02-03 18:51:54 PST

Bart Massey 2025

I've given an assignment in a couple of Rust classes I've taught

recently involving Cellular

Automaton (CA) "Rule 110". The latest

version of the assignment is at the end of this post — please take a

second to read it, noting especially the transition table there.

Implementing Rule 110

The obvious way to generate a next row using Rule 110 is to walk over

the current row one bit at a time, take the "left" (L), "center" (C) and

"right" (R) bit relative to the current position, and use Rule 110 to

compute an output bit. Note that the output bit must be placed in a new

row, to avoid corrupting the row computation.

This algorithm allows various implementations of the row datatype. A

good starting place in a language with a Boolean type and sized arrays —

Rust — is to use an array of Booleans as the row type. In Rust this

looks like [bool;8] and occupies one byte per boolean. The

result is pretty inefficient but easy to work with: for example, unlike

in C, Rust array assignment and parameter passing are by value (copied)

instead of by reference.

Once you've fixed a representation of the row, and of the LCR values

— in Rust, just [bool; 3] — you can write a function that,

given an LCR, returns the correct Boolean; that function finishes the

algorithm. There are many ways to accomplish this: a simple

match (like C switch) statement is probably as

easy in Rust as anything. The Rust compiler will even notice and report

errors like incomplete or overlapping matches, which is nice for

catching copy-paste bugs.
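A minimal sketch of this array-of-Booleans approach, fixed at eight cells, might look like the following. The names `Row`, `rule110` and `next_row` are illustrative, and this is not necessarily how the published solution is organized.

```rust
/// One row of the automaton: eight cells, one `bool` per cell.
type Row = [bool; 8];

/// Rule 110 as a lookup on the (left, center, right) cells.
fn rule110(lcr: [bool; 3]) -> bool {
    match lcr {
        [true, true, true] => false,
        [true, true, false] => true,
        [true, false, true] => true,
        [true, false, false] => false,
        [false, true, true] => true,
        [false, true, false] => true,
        [false, false, true] => true,
        [false, false, false] => false,
    }
}

/// Compute the next row into a fresh array, wrapping around at the ends.
fn next_row(cur: Row) -> Row {
    let n = cur.len();
    let mut next = [false; 8];
    for i in 0..n {
        let left = cur[(i + n - 1) % n];
        let right = cur[(i + 1) % n];
        next[i] = rule110([left, cur[i], right]);
    }
    next
}
```

Deleting or duplicating one of the `match` arms is a quick way to see the compiler diagnostics mentioned above.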

Going Bitwise

An obvious next step is to improve the performance of everything by

changing the data representation. Rows of length up to 64 can

conveniently be represented as a simple unsigned integer:

u64 in Rust, uint64_t in modern C. (Rust also

supports u128, but since that is not a native type in most

CPUs, more than 64 bits per row might indicate time to just switch to a

full-on bit-vector package.)

Now some shifting and masking can get the LCR bits at each position

into the low-order position, at which point the Rule 110 calculation can

just take an integer in the range [0..7] to a Boolean.

Indeed, this works for any CA rule: my code contains this function

fn by_rule(rule: u8, bits: u64) -> u64 {

((rule >> (bits & 0x7) as u32) & 1) as u64

}

(Excuse the gross as casts in the Rust code. Rust does

no automatic numeric promotion, so getting the types right is down to

casting without screwing up: as casts cannot panic, so will

do weird (deterministic) things if you get them wrong. Not a great

language feature, honestly.)
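To show the shifting and masking around that lookup, here is a hedged sketch of a full row step built on `by_rule`. The function `step_by_rule` and its `nbits` parameter (row width, 1 to 64) are names assumed for illustration. Bit `nbits - 1` is taken to be the leftmost cell.

```rust
/// The rule lookup from the text: bit `bits & 7` of the rule number.
fn by_rule(rule: u8, bits: u64) -> u64 {
    ((rule >> (bits & 0x7) as u32) & 1) as u64
}

/// Next row for any elementary CA rule, one cell at a time.
/// `nbits` (1..=64) is the row width; bit `nbits - 1` is the leftmost cell.
fn step_by_rule(rule: u8, cur: u64, nbits: u32) -> u64 {
    let mut next = 0;
    for i in 0..nbits {
        // Neighbor positions, wrapping around at the ends.
        let l = (cur >> ((i + 1) % nbits)) & 1;
        let c = (cur >> i) & 1;
        let r = (cur >> ((i + nbits - 1) % nbits)) & 1;
        // Pack L, C, R into the low three bits and look up the output.
        next |= by_rule(rule, (l << 2) | (c << 1) | r) << i;
    }
    next
}
```

This still touches one cell per loop iteration, so the cost grows with the row width.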

This optimization provides a substantial speedup in my application,

but it still leaves a bunch on the table. For "short" rows like this, we

should be able to get bitwise parallelism if we are careful.

However, the only way I see to do this is rule-specific: we can

hard-wire a Boolean computation using the structure of (for example)

Rule 110 that produces the right answer. Let's do that.

Optimizing Boolean Functions

Rule 110 is defined by a Boolean truth table. These are familiar

objects to Computer Scientists and Electrical Engineers. Let's write

down the actual table, but upside down.

LCR Q

111 0

110 1

101 1

100 0

011 1

010 1

001 1

000 0

The reason for the name "Rule 110" is thus made apparent: reading

Q from top to bottom as a binary number yields decimal 110.

(You can tell that rule name inventor Stephen Wolfram is not a "real"

computer person. The rule numbering should have been hex (6E here) for

ease of use.)

Those who recall days gone by or work with this stuff regularly may

see that there's an obvious Boolean expression here that will produce

the right answer. Let's let the negation of a positive literal be its

lowercase: for example, "not L" is "l". Then

the "sum of products" (SOP) expression for Q is

LCr + LcR + lCR + lCr + lcR

where plus is bitwise "or" and adjacency is bitwise "and".

This is a fairly expensive expression to compute, so we'd like to see

if we can simplify it to something cheaper that gives the same

answer.

Boolean Algebra

One way is just using Boolean algebra: we can easily factor

L and l to get

L(Cr + cR) + l(CR + Cr + cR)

The extremely clever will note that we can then use Boolean "xor",

which we will denote with "^" as per horrible C (and Rust) convention.

(Why horrible? Because people confuse it with exponentiation all the

time, and then the typechecker passes it, and then the bad thing

happens.)

L(C ^ R) + l(CR + (C ^ R))

The next steps go as follows

L(C ^ R) + lCR + l(C ^ R)

(L + l)(C ^ R) + lCR

(C ^ R) + lCR

This is a boolean expression we can compute fairly cheaply: it

requires an "xor", an "or", two "and"s and a "not" for a total of 5

operations.

Karnaugh Maps

By using Boolean algebra we got from

LCr + LcR + lCR + lCr + lcR

to

(C ^ R) + lCR

which is quite an improvement. But it was also a fairly involved

process with many fiddly steps to get wrong.

The Karnaugh

Map is a well-known device in Electrical Engineering, but maybe less

well-known in Computer Science: a graphical method of quickly

simplifying a Boolean expression.

(Here we will work with our specific 3-literal SOP expression.

Karnaugh Maps can be made for any number of literals, but they get hard

to reason with as the number of literals becomes large. They also can be

used to simplify product-of-sums (POS) expressions.)

The Karnaugh Map for our initial expression might look like this:

CR Cr cR cr

L 0 1 1 0

l 1 1 1 0

We have done the ordering "backward" because the original truth table

is backward: this is not normal, but it doesn't matter to the method. We

have also chosen to group C and R: any grouping will work, although

changing the representation may make salient features more or less

graphically obvious.
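Filling in the map by hand is easy to get wrong, so here is a small sketch that builds it from the rule number, using the same row and column order as above. The function name `karnaugh` is made up for this example.

```rust
/// Build the 2x4 Karnaugh map for an elementary CA rule, using the
/// layout above: rows L, l and columns CR, Cr, cR, cr.
fn karnaugh(rule: u8) -> [[u8; 4]; 2] {
    let mut map = [[0; 4]; 2];
    for (row, &l) in [1u8, 0].iter().enumerate() {
        for (col, &cr) in [0b11u8, 0b10, 0b01, 0b00].iter().enumerate() {
            // The LCR bits, read as a number, index into the rule.
            map[row][col] = (rule >> ((l << 2) | cr)) & 1;
        }
    }
    map
}
```

For rule 110 this returns the two rows shown above: 0 1 1 0 and 1 1 1 0.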

Now, the heart of the Karnaugh Map method is to try to create large

rectangles that cover all-ones areas. Each rectangle corresponds to a

min-term in the simplified SOP formula.

Let's start with the large rectangle in the center, starring each

element.

CR Cr cR cr

L 0 *1 *1 0

l 1 *1 *1 0

This could give us two min-terms either vertically or horizontally.

However, the graphical view gives us an easy way to spot what we spotted

before: we will add the combined term C ^ R. Now we have

covered all the one-bits but the lower-left one: we will cover that with

a second 1×1 rectangle, getting

CR Cr cR cr

L 0 1 1 0

l *1 1 1 0

This gives us a second min-term lCR, so our combined

expression is as before.

(C ^ R) + lCR

That was way easier. Our expression still has a cost of 5

operations.

But wait — there's more! In that last step we violated our rule of

making big rectangles. Nothing says the rectangles can't overlap. Making

the 1×3 rectangle

CR Cr cR cr

L 0 1 1 0

l *1 *1 *1 0

gives us l(C + cR) which isn't as cheap as before. (In

general, crossing even boundaries is expensive.) But making the 1×2

rectangle

CR Cr cR cr

L 0 1 1 0

l *1 *1 1 0

gives us lC which is a 20% savings! Our expression costs

4 now:

(C ^ R) + lC

This seems counterintuitive, so we should probably check it with a

truth table.

LCR C^R lC Q

111 0 0 0

110 1 0 1

101 1 0 1

100 0 0 0

011 0 1 1

010 1 1 1

001 1 0 1

000 0 0 0

Huh. Works perfectly.
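The same check is easy to automate. This sketch compares the four-operation formula against the bits of the rule number for all eight inputs. The names `q_cheap` and `matches_rule` are illustrative.

```rust
/// The four-operation form: (C ^ R) + lC.
fn q_cheap(l: bool, c: bool, r: bool) -> bool {
    (c ^ r) | (!l & c)
}

/// Compare the formula against the bits of the rule number itself.
fn matches_rule(rule: u8) -> bool {
    (0..8u8).all(|lcr| {
        let (l, c, r) = ((lcr & 4) != 0, (lcr & 2) != 0, (lcr & 1) != 0);
        q_cheap(l, c, r) == (((rule >> lcr) & 1) != 0)
    })
}
```

`matches_rule(110)` holds, and any other rule number fails, which is a cheap guard against slips in the algebra.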

Could we do better? Notice that Q has more 1 bits than 0

bits. Maybe we should pay for a negation and work with the map for

q?

CR Cr cR cr

L 1 0 0 1

l 0 0 0 1

This leads to q as

cr + LCR

This has 7 operations counting the invisible negation, so not as

good.

Since we need Q we could try to deMorgan-ize and factor

to get

!(cr) !(LCR)

(C + R) (l + c + r)


Cl + Cr + lR + cR

l(C + R) + (C ^ R)

5 operations, but we ended doing some fiddly Boolean algebra again.

Not an improvement.

Bitwise Parallelism

Now we have a really nice Boolean formula for Rule 110. Yay. Remember

that we started with a really nice formula generalized over all rule

numbers. What does the specialization buy us?

Well, now we can use "bitwise parallelism" to generate all the bits

of the next row at once. Notice that R[i] is just

(C << 1)[i] and L[i] is

(C >> 1)[i]. Soo…

let left = cur >> 1;

let right = cur << 1;

let next = (cur ^ right) | (!left & cur);

Note that Rust uses ! for bitwise negation of integer

types. Wouldn't have been my choice, but it's fine.

We've computed a whole next row of up to 64 bits at once. This is

nice. Really nice.

There's only one remaining problem. The end positions will not be

computed correctly. Right now we're shifting 0 into the

right value, and whatever was lying around in the

next-upper bit into the left. Then we're negating

left without consideration of how many bits actually need

to flip, essentially trashing the left bit for the next iteration.

Wrapping Around

Recall that we are to "wrap around" at the ends. Let's add a little

masking and rotate action. Let nbits be the number of

least-significant bits that are supposed to be part of the row. We can

rotate right by shifting right and wrapping the rightmost bit back around.

Similarly, we can rotate left by shifting left and wrapping the leftmost bit

back around. The mask at the end ensures that there's a 0 bit left of

the significant bits on the next cycle.

let left = (cur >> 1) | (cur << (nbits - 1));

let right = (cur << 1) | (cur >> (nbits - 1));

let mask = u64::MAX >> (64 - nbits);

let next = ((cur ^ right) | (!left & cur)) & mask;

The Rust compiler, when run in the default debug mode, inserts

integer overflow assertions in the code. (Honestly, these should be on by

default in optimized "release" compiles as well. Sigh.) That is why the

mask is built by shifting u64::MAX right. The more obvious

(1u64 << nbits).wrapping_sub(1) fails when nbits == 64: the shift

panics in a debug build, and in a release build the shift count wraps

to 0 and the mask comes out as 0.
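Pulled together into functions, the wrapped step might look like this sketch. The names `step110` and `render` are assumptions for illustration. The mask is built by shifting `u64::MAX`, which is valid for any width from 1 to 64, and `cur` is assumed to have no bits set above `nbits`.

```rust
/// One bit-parallel Rule 110 step on the low `nbits` (1..=64) bits of `cur`.
/// Bits of `cur` above `nbits` must be zero.
fn step110(cur: u64, nbits: u32) -> u64 {
    // Rotate within the row so that each bit lines up with its neighbor.
    let left = (cur >> 1) | (cur << (nbits - 1));
    let right = (cur << 1) | (cur >> (nbits - 1));
    // Keep only the bits that belong to the row.
    let mask = u64::MAX >> (64 - nbits);
    ((cur ^ right) | (!left & cur)) & mask
}

/// Render a row with `*` for 1 bits and `.` for 0 bits, leftmost cell first.
fn render(row: u64, nbits: u32) -> String {
    (0..nbits)
        .rev()
        .map(|i| if ((row >> i) & 1) == 1 { '*' } else { '.' })
        .collect()
}
```

Starting from `0b1010_0100` with `nbits` of 8, one step renders as `***.**.*`, matching the second row of the assignment.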

With the code above we can apparently produce a new row of up to 64

bits in 15 cycles. Of course, that's a really gross approximation to

real performance. The calculation assumes that everything can be in

64-bit registers all the time — not unreasonable on a modern "big" CPU.

The calculation also doesn't take into account cycle times: the integer

operations may issue at two or more per cycle depending on… things. But

still…

Testing

We have done a lot to get to this point. We should probably start by

comparing with a known-good implementation to make sure that our fiddly

bit-wiggling works. I'll check the first 10 rows of the 8-cell table

from our assignment — that should be good enough. (Remember the

assignment? Seems like a long time since we started there.) One moment,

please.

Ok, full disclosure. The version of the row operation given above is

not what I started with. I made one masking bug and missed one

optimization in the first version. This is why we test: the core idea

was sound, but my code was not. Fixed.

The whole point of all this fiddliness was, in some sense, to get a

really fast implementation of Rule 110. Let's see how we did.

The results? The old method (already faster) makes 100M rows in about

3.36s. The new method makes 100M rows in 0.15s. Roughly a 22× speedup.

1.46s for 1B rows, about 685M rows per second on a single core of my

2.2GHz machine.
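For anyone wanting to reproduce this kind of measurement, here is a rough timing sketch. It is not the harness behind the numbers above: `time_rows` is a made-up name, and the use of `std::hint::black_box` to keep the optimizer honest is a choice made for this example.

```rust
use std::hint::black_box;
use std::time::{Duration, Instant};

/// One bit-parallel Rule 110 step on the low `nbits` (1..=64) bits of `cur`.
fn step110(cur: u64, nbits: u32) -> u64 {
    let left = (cur >> 1) | (cur << (nbits - 1));
    let right = (cur << 1) | (cur >> (nbits - 1));
    ((cur ^ right) | (!left & cur)) & (u64::MAX >> (64 - nbits))
}

/// Run `rows` steps from `start` and report the final row and elapsed time.
fn time_rows(start: u64, nbits: u32, rows: u64) -> (u64, Duration) {
    let begin = Instant::now();
    let mut cur = start;
    for _ in 0..rows {
        // black_box keeps the optimizer from skipping the work.
        cur = black_box(step110(cur, nbits));
    }
    (cur, begin.elapsed())
}
```

Build with `--release` before reading anything into the timings. Debug builds will be far slower.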

But wait — there's one more set of tricks to apply. I can set up

rustc to compile for my real "modern" CPU (AMD Ryzen 9

3900X) instead of some least-common-denominator x86_64. I

can also turn all the rustc optimizations I know about up

to 11. The result? Got 1B rows in 1.42s! Yeah, compiler don't care.

After applying the same optimizations to the previous version, I

found 3.07s and 0.14s for 100M rows, respectively — our speedup is about

the same. The compiler can do a lot more with the "slow" version,

apparently, because there's more code there to work with.

One final idea: this benchmark is somewhat specific. The row width

shouldn't matter much to the fast version, but it matters a lot

to the old version. I chose 8 bits just because that is what we started

with. Let's try a couple more widths on 100M rows just to verify a bunch

of assumptions. With width 1, I get 0.75s and 0.14s respectively. Still

a respectable 5× speedup in the worst ("real") case. With width 64, I

get 21.9s and 0.14s. That's kind of a big deal.

We have won.

Future Work

Battles don't stay won. There's things we could do to be stronger,

faster, better than before.

Bit parallelism is the best parallelism. If you want to go wider

than 64-bit rows, though, you'll probably want to investigate the other

kind. The trick here, of course, is the boundaries: some communication

between cores is needed on each cycle. This can slow you down massively.

However, because end corruption can only travel inward at one bit per

row, you can do some batching and fix things up later. It all gets

fiddly and really hard to make fast.

The manual process we went through to get the Rule 110 map seems

automatable. Some kind of nice Boolean formula optimizer seems in order.

Since there are only 256 rules (some of which are utterly boring) we

could pre-compute a Boolean formula for each and hard-code it into a

table. Alternatively, we could find some reasonably fast optimizer and

do this on the fly. We really should handle arbitrary rules.
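Even without an optimizer, arbitrary rules can be made bit-parallel by evaluating the unsimplified sum of products directly. The sketch below is one assumed way to do that. The name `step_rule` is invented, and it makes no attempt to find a cheaper formula for each rule.

```rust
/// One bit-parallel step of any elementary CA rule on the low `nbits`
/// (1..=64) bits of `cur`. This uses the unsimplified sum of products:
/// one AND term per 1 bit in the rule number.
fn step_rule(rule: u8, cur: u64, nbits: u32) -> u64 {
    let left = (cur >> 1) | (cur << (nbits - 1));
    let right = (cur << 1) | (cur >> (nbits - 1));
    let mut next = 0;
    for lcr in 0..8u8 {
        if ((rule >> lcr) & 1) == 0 {
            continue;
        }
        // Select each input or its complement to match this LCR pattern.
        let l = if (lcr & 4) != 0 { left } else { !left };
        let c = if (lcr & 2) != 0 { cur } else { !cur };
        let r = if (lcr & 1) != 0 { right } else { !right };
        next |= l & c & r;
    }
    // Discard anything above the row width.
    next & (u64::MAX >> (64 - nbits))
}
```

The cost is at most eight product terms per row regardless of width, which is more than the four operations of the hand-tuned Rule 110 formula but still independent of the row length.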

Conclusions

I set out to write a short little thing to explain to those

interested what I was doing today. I would estimate that I ended up

spending about four hours writing and about two hours actually doing the

thing. This is not an unusual ratio for me, and I think for others.

The good news is that I ended up with some interesting insights for

myself (even on this well-traveled road). Hopefully you learned a thing

or two as well.

Acknowledgments

CA Rule 110 was first introduced to me in Fall 2008 when I was

teaching a Multicore Computing class. (I ended up losing to only a

couple of students from the class of 20 or so, pitting some forgotten

variant of my single-threaded implementation described in this article

against their 16-core things.) Thanks much to the student who brought

this problem to the class.

Keith Packard and others taught me the art of bit-banging, long ago.

Thanks for that! Also thanks to Hank Warren's Hacker's Delight,

the one true bit-parallel book.

Availability

The Rust source code for everything here is available under an

open-source license at https://github.com/pdx-cs-rust/hw-rule110-solution.

Please see the README there for details.

Appendix: Rule 110 Assignment

Bart Massey 2025

Background

In this exercise you will create an ASCII rendering of the Rule 110 Elementary

Cellular Automaton (CA). Not as scary as it sounds, and a nice

simple Rust thing to play with.

A CA starts with a random row of bits. We will represent 1 bits with

`*` and 0 bits with `.`. Our starting row will

specifically be this 8-bit position for now.

*.*..*..

To produce the next row, take bits three at a time, "wrapping around"

if a boundary is hit (bit 7 is next to bit 0 and so forth). A group of

three bits in row n will determine the center bit position in

row n + 1 according to Rule 110:

111 → 0

110 → 1

101 → 1

100 → 0

011 → 1

010 → 1

001 → 1

000 → 0

So for our start, the first two rows will be

*.*..*..

***.**.*

(Notice the wraparound at the beginning and end.)

The Program

Write a program that prints ten rows starting with the two given.

Challenges

(Challenges are not required, but give

a chance to do something extra. Turn them in and write them up in the

README.)

Make your program take a command-line argument for the starting

row.

Time your program. See how fast you can make it go. Compare

performance with a C or C++ implementation. You will probably have to

modify the program to print a lot more rows: maybe some number

given by a command-line argument.
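As a starting point for the command-line challenge, here is a small sketch of parsing a starting row into an integer and a width. The name `parse_row` and the choice to reject rows wider than 64 cells are assumptions for this example.

```rust
/// Parse a row such as "*.*..*.." into its bits and width.
/// Returns `None` for an empty row, a row wider than 64 cells, or any
/// character other than `*` and `.`.
fn parse_row(text: &str) -> Option<(u64, u32)> {
    let mut row = 0u64;
    let mut nbits = 0u32;
    for ch in text.chars() {
        let bit = match ch {
            '*' => 1,
            '.' => 0,
            _ => return None,
        };
        if nbits == 64 {
            return None;
        }
        // The first character is the leftmost cell, so it ends up
        // in the highest bit of the row.
        row = (row << 1) | bit;
        nbits += 1;
    }
    if nbits == 0 {
        None
    } else {
        Some((row, nbits))
    }
}
```

The argument itself can come from `std::env::args().nth(1)`.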

2024-12-30 01:19:18 PST

Bart Massey 2024

I spent tonight continuing to work on moving my web content to my

cloud server. This particular task was bittersweet at best.

My beloved brother Byron passed

away in 2010. I miss him constantly.

Byron's website was at http://nealhere.com. I let the

domain name go, but kept the website alive in his memory at http://nealhere.po8.org. Take a look:

there's a lot of cool old stuff there.

The move was complicated because Byron had built

nealhere using a now-ancient version of the excellent

blosxom CGI static site generator. nealhere

also used server-side includes and .htaccess. So I figured

out how to make all these things work with my new nginx

installation.

blosxom needed CGI.pm to function.

apt install libcgi-pm-perl.

blosxom needed plain old CGI. Unfortunately, "slow"

CGI is long gone, and the wrapping needed to make things work with

FastCGI took some discovery. apt install fcgiwrap, then

look at the nginx example under

/usr/share/doc/fcgiwrap to get some setup hints.

Byron's setup used .htaccess to do rewrites. Found

out how to do this with nginx rewrite rules.

Unfortunately, the cursed Zulip installation on the cloud server

had set the running userid of nginx to zulip.

This caused the fcgiwrap socket to be inaccessible. It's

also wrong and gross. I edited the fcgiwrap.socket config

file for systemd for now.

Everything is hard and I'm tired. I'm regretting not running Zulip in

a VM now. It clearly does not play nice with others.

I miss you, Byron.

2024-12-29 23:32:45 PST

Bart Massey 2024

In concert with the move I reported in my previous blog entry, I

decided to move my RSS feed reader Miniflux to my cloud server. That is the

logical place for it, I think.

I spent six or eight hours trying, and gave up.

Miniflux is a server that polls RSS (and/or Atom) feeds regularly and

provides a web interface to browse them. I like Miniflux's functionality

very much: it became my RSS solution sometime after Google bailed

on Google Reader in 2013. I would love to think that Google killed it

because it was too hard to maintain, and with the hope that others would

take up the slack. The cynic in me, though, thinks that Google killed

Reader because it's really hard to generate advertising dollars or

gather much interesting personal information with an RSS platform, even

one you control.

Anyhow, Miniflux is written in Go, which seems fine for this. It also

uses Postgres for its data store. I am a big fan of Postgres in the

abstract: it is the only truly open-source database I am aware of that

is usable for "big" professional-grade deployments.

Sadly, I am less of a fan of Postgres in the concrete these days. It

has become really "enterprisey", and doesn't seem to have much

innovation or support for "normal" use cases. The big problem with

Postgres — the awful, awful problem that has kept MySQL alive and made

SQLite the correct solution for this kind of deployment — is its

horrible authentication and security setup story. I have watched

students and open source developers struggle here, and ultimately admit

defeat. Heck, I have done it myself.

I don't have the energy to go into the Postgres setup and

administration story in detail. Here's a few of the commands I typed at

Postgres while trying to dump the miniflux database on my old server and

then restore it on my new server. I don't know exactly what these do, so

don't use them unless you know what you are doing. They didn't work for

me much anyhow, but I put them here to give an idea and because I may

want them later. Notes, in other words.

ALTER DATABASE postgres REFRESH COLLATION VERSION;

CREATE USER miniflux WITH PASSWORD 'xxxxxxxx';

CREATE DATABASE miniflux WITH OWNER = miniflux;

GRANT ALL PRIVILEGES ON DATABASE miniflux TO miniflux;

\c miniflux

GRANT ALL PRIVILEGES ON SCHEMA public TO miniflux;

Eventually, after exploring and learning a lot, I got my Miniflux

installation running on my cloud server. It seemed to be working

perfectly, so I patted myself on the back and went to bed.

In the morning, it developed that the feed sync couldn't update the

database. I kept getting a cryptic error about "schema zulip". Something

in the Zulip Postgres installation, I eventually concluded, was keeping

the Miniflux Postgres installation from working. I tried to debug it for

quite a while, but eventually concluded it was beyond me. I filed an issue asking for

help from Miniflux (maybe should have tried Zulip as well) and moved

back to accessing Miniflux on my own server for now.

I clearly have too much infrastructure in play. I need to get it down

somehow. Ah well. Will let you know if anything develops: maybe you'll

see the post via my Atom feed.

2024-12-29 02:19:25 PST

Bart Massey 2024

I've retired the main server that acts as the DMZ box for my home

network. The machine now called bartgone served me

faithfully for so many years that I have no idea how old it actually is,

but its time has come.

Hmm. Let's see how old bartgone might be.

- Intel "Core2 6400" @ 2.13GHz (probably Conroe Core 2 Duo E6400,

2006)

- 4GB RAM

- Intel DG965W Motherboard (2006)

So, best guess is late 2006 sometime. That makes

bartgone about 18 years old. During that time, it has run

24/7/365 faithfully and well, routing packets and providing services to

my home net and the internets.

I've replaced bartgone with bartlet, a GMK

NucBox M6 mini-PC. I bought it cheap a couple of months ago.

- AMD Ryzen 5 6600H (6 cores, 12 threads)

- 16GB RAM

- Dual ethernet onboard

So far looks like it's going to be a fantastic replacement. May it

last me another 20 years.

2024-12-17 21:34:55 PST

Bart Massey 2024

Thought I'd do a quick post-mortem (damn near, anyway) on my big

adventure of the last few hours. It involved reconfiguring a Zulip server I run, and was supposed to be

a quick thing. But Zulip is never quick.

Zulip has an interesting configuration option for allowing multiple

Zulip chat servers on a single host server. They call this "realms" for

some reason. By default you only get the one default realm on your

server, so that's what I got when I very quickly set mine up a couple of

years ago.

I now wanted to reconfigure to allow multiple realms:

https://site1.zulip.example.com (for example), and

https://site2.zulip.example.com instead of just

https://zulip.example.com on my cloud server.

Thus the fun began…

DNS

So the first thing was to get the domain names set up. I run the DNS

for example.com. I am serving it with bind9 or

bind or named — all different names for the

same piece of software in use on my home Linux server, depending on

context. It turns out that systemctl restart named and

systemctl restart bind9 are just aliases of each other.

Which is weird.

I've spent a lot of time in /etc/bind configuring this

thing, so I wasn't anticipating any big deal. I slapped

site1.zulip.example.com as an A record in the zone table

and… nope.

A half-hour of flailing later I called a friend who is both generous

with his time and a genius. He too was confused. The thing we both

thought should work, and the internets thought should work, didn't

work.

Skipping a bunch more flailing, the desired result was achieved by

adding a new zone for zulip.example.com in the zone file

for example.com (as zone master, backed up to my friend as

zone, er, alternate). With the NS and CNAME records filled in just

right, it all just worked.

Upgrading Zulip

Before I tried to do anything with Zulip, I figured I should upgrade

first, because it was time anyway and I'd be working from a stable base.

Sadly, Zulip is not packaged for Debian as far as I can tell, so I had

to download a big tarball and have some script from the existing Zulip

installation run the upgrade.

The Zulip install script refused, because "unsupported Debian

version". Much digging around later, it turns out my cloud server

provider, who had graciously installed Debian for me, had done something

that altered both /etc/debian_version and

/etc/os-release to say I was running

trixie/sid. Some careful hand-editing of these files got me

back to where the Zulip script was willing to admit that I had an OS

they supported and install the software.

There was one other quirk: the installer wanted libvips,

but Debian had only libvips42. Huh. So I broke down the

upgrade tarball, hand-edited the dependency, and then rebuilt the

tarball and gave it to the installer again. Success.

Move The Existing Zulip

I then wanted to move the existing Zulip from

zulip.example.com to site1.zulip.example.com.

I used the Zulip backup script (wouldn't work earlier because of the

version thing) to back the existing Zulip up, then just used another

Zulip script to move the thing. Just worked, which surprised me.

Deal With Nginx and Certificates

Of course, everything has to be TLS now. So I ran another

Zulip script which ran certbot to get a new TLS certificate

for site1.zulip.example.com. (Given the number of Zulip

instances I ever expect to run, getting a wildcard cert seemed like

excessive effort.)

I then confronted a couple of sad realities: nothing was working, and

nginx configuration was the problem. I have been using

Apache since it came out, and I am just not that comfortable with

nginx. However, it was on this server because reasons and

seemed hard to replace, so I buckled down and started to patch up the

config.

One issue was another service running on my cloud box, "Punchy".

Punchy had its nginx config installed in

/etc/nginx/conf.d and really wanted to be in charge of the

TLS for everybody. I finally dpkg-diverted it to

sites-available where it should have been in the first

place.

The key finding of this phase was that every

server section needed to have a server_name

set. Anything that didn't just kind of took over everything else.

Finally sorted that all out.

One Last Zulip Config

At this point, I had my Zulip desktop client talking successfully to

site1.zulip.example.com. Hooray.

Unfortunately, browser access not so much. The browser took a login,

but then just hung spinning, with a message that said "if this doesn't

come back in a few seconds try reloading the page". Needless to say, a

reload solved nothing.

Many adventures later, I got out the browser developer

tools, which reported that Zulip was still trying (and failing) to talk

to zulip.example.com. I then discovered

/etc/zulip/config.py, which had

zulip.example.com set as primary, and no entry in the

alternate hostname for site1.zulip.example.com. I added the

latter, and then altered the nginx configuration to allow

the former.

Conclusions and Future Work

Hooray. I'm back to where I started. Except now I'm running Zulip the

way I wanted to, and also now I've fixed the Punchy config and also have

figured out how to do a static site for my cloud server using

nginx. Way too many hours, but a moderate success.

In digging through Zulip stuff I noticed that it may support Github

and Google for auth now. I need to look into this: it's way more

convenient.

Now if Zulip would fix alerts on mobile it might become actually

usable for people. Hooray.

2024-12-08 00:11:24 PST

Bart Massey 2024

Just got a Lenovo X1 Carbon Gen 13 Aura Edition (yes, its real name)

running Windows 11. I was setting up to dual-boot Lenovo-endorsed Ubuntu

Wayland, and started this notebook to document the process.

The goal of this notebook is several-fold:

Remind future me what I did. ("'Future Me.' I hate that guy. He

knows what he did." —Julian Kongslie)

Give others a guide with some pitfalls pointed out and

navigated

Give feedback to the Ubuntu folks.

Background

It may be helpful to know where I'm coming from.

I started using 2.9BSD UNIX on a PDP-11 in 1982, and became a paid

sysadmin and later consultant and teacher of UNIX stuff. I started with

Linux around 1.2.13, using whatever "distros" existed then. Later, I was

a paid admin for a network of Red Hat boxes, then switched to Debian at

slink. I've been using Debian continuously since then. I

have small patches in the Linux kernel. I was one of the founders of

the Linux Plumbers Conference.

What I'm saying is that I'm old enough and arrogant enough that the

ridiculous mess that follows is something I can't imagine a new Linux

user wading through on their own. In my opinion it was utterly

irresponsible of Lenovo to claim they supported Ubuntu on the Carbon Gen

13 when it worked like this.

This is my first serious messing-about with Ubuntu. So

that's on me.

2024-12-04

Prepping For Linux

Previously on this machine, I had installed Debian Sid. Gave it up,

as X11 seemed not to be available and I didn't want to figure out Debian

Wayland. In the process I had pre-done a few things to prep for

Linux:

Modified Secure Boot: As shipped, Secure Boot would not

allow booting non-Microsoft OSes. Turned on laptop, got into BIOS

(repeatedly push F1 during boot) and found the setting for Secure Boot

and modified it to allow Third-Party CA Certificates.

On the next Windows boot, this made me supply my BitLocker key, since

the Secure Boot setting had changed. Did so without incident. I had no

idea the Windows 11 install had shipped with BitLocker.

Disabled BitLocker: A partition with BitLocker enabled

cannot be resized by any means. However, needed to split the main 2TB

partition between Windows and Linux. So found instructions for turning

off BitLocker on the Windows partition. Should have done this before

modifying Secure Boot.

Shrank Windows Partition: From the Debian installer,

shrank the Windows partition to 500GB (should be plenty since I rarely

use Windows for anything). I then let the installer use its default

partitioning scheme on the resulting free space. Little did I know that

I would reclaim this free space and start over later with

Ubuntu.

The total time for this step was probably around an hour. It would be

much faster if repeated.

Installing Ubuntu

Now I was ready to start over with Ubuntu. Some of these steps were

actually duplicates of Debian install things, but we'll pretend that

they were all new.

Configured DNS: Set up bartcarbon13.po8.org

with IP address 192.168.1.13 using my local DNS

infrastructure.

Configured DHCP: Set up my local DHCP infrastructure to

recognize my machine with dhcp-client-identifier

bartcarbon13. This was necessary since the ethernet address

would be that of an adapter rather than the machine itself.

Built Ubuntu USB bootstick: Grabbed an old 8GB USB flash

drive and copied a redundantly-named Ubuntu

plucky-mini-iso-amd64.iso onto the base drive device (not a

partition). Was extremely careful to check the device with

parted right before the copy: easy mistake to copy your

boot iso onto one of your actual hard drives, leading directly to

backup-recovery-town.

Booted from USB: Turned on the laptop and got into the

boot menu (repeatedly hit F12 during boot). Selected booting from USB.

Was confronted with micro-text menu due to HiDPI display. Got my

super-magnifying-glasses on.

Tried to start install: Turns out the micro-menu just

said "Choose an Ubuntu Distribution To Install". Why is this a menu

item?

Next micro-menu offered me a variety of options, none of which was

the "Plucky" the mini-iso said it would install for me. I wouldn't mind

an earlier Ubuntu, except I doubt it would support the Intel Arc

Graphics on my laptop.

Folks say installing Ubuntu is easier than installing Debian. Don't

think they've tried recently.

Tried installing again: Fetched a daily-build

plucky-desktop-amd64.iso after a 5-minute download. 5.4GB

should fit on my 8GB USB stick, but not with miles to spare for sure.

Dumped the ISO onto my USB drive in another 18 minutes — that's a very

slow drive and a big image.

This time was presented with a four-item micro-menu. While trying to

figure out which option to select, the bootloader timed out and started

the default "Install Ubuntu." Apparently I was going with that.

After a couple of minutes of staring at a splash screen, I got an

Ubuntu desktop screen. A minute later Ubuntu was more-or-less up,

apparently running as a Live Image off the really slow USB drive. Not

ideal, but I decided I could try working with it.

Tried an actual install: Noticed "Install Ubuntu 25.04"

in the lower-right corner of the desktop, and decided to click on that

once the handy USB drive activity light mostly quit flashing. The only

effect seemed to be refreshing the list of volumes in the hotbar.

After a while I started clicking on things and eventually ended up

stuck in an application search screen. I apparently had windows open

according to the hotbar, but had no idea how to get to them. A quick

Google search of "ubuntu return to desktop from app search screen" gave

as its top hit "Super+D or Ctrl+Alt+D: Show desktop". Nope. Found a

System76 tutorial on Ubuntu Basics, which was helpful. Still couldn't

get anywhere.

Finally gave up and rebooted.

Tried installing again: This time chose "Ubuntu (safe

graphics)" from the boot menu, because I knew what was coming. Figured

maybe there were graphics issues with the Arc stuff that weren't yet

sorted on the Live CD. Waited again for a couple of minutes for things

to come up.

This time I would be careful not to search for applications.

Got a window that said "Preparing Ubuntu" this time! Seemed more

promising. I suspect graphics were just borked on the previous attempt.

Frickin' bespoke hardware.

Continued installing: OK! Got the expected installation

prompts. Apparently graphics were borked after all.

Everything was still micro-text, of course. Got out my heavy

magnifying glasses again. Then switched to my Optivisor™ because the

focal length of the glasses was like two inches and I wanted my face out

of the screen.

But hey, on the second screen of the install I was offered the

accessibility option of "Desktop Zoom". Perfect! But it turned out to be

pan-and-zoom. Nope. Settled for "Large Text" and continued

magnification, with the plan of fixing later.

Worked through the Ubuntu installer: Other than nice

graphics, the screens and choices seemed to be pretty similar to the

standard Debian installer.

Realized in here somewhere that I probably wanted to set up an Ubuntu

mirror at home. Sigh.

Changed disk partitions: Oh! The installer offered to

keep my old Debian installation and install Ubuntu as a third boot option for

now. Tough choice. I could always delete the Debian partition later if I

didn't miss it. But I couldn't imagine why I wouldn't miss it, and it

would be easier now.

I decided to suck it up, take "Manual Installation" and reuse the

Debian partition for Ubuntu. The partition editor was pretty

Debian-standard, but was not interested in letting me erase the Debian

partition. I didn't know what would happen, but decided to plunge

on.

Continued installation: Having the timezone

configuration auto-locate me, presumably by IP, was a nice touch: even

Windows didn't do that.

Huh. The "Review Your Choices" panel pointed out that I had forgotten

to go back and mark the swap partition as "swap" instead of "leave as

swap". That's gross, but OK. Unfortunately the partition editor then

made me reset everything else about the configuration, including stuff

it had initially auto-guessed. Ah well. I'm kind of used to using a swap

file instead of a swap partition for flexibility, but this was chosen

for me by the Debian install and I decided to go along.

Installed installation: I pressed the "Install"ifier.

Started a copy of files off the USB stick. Wasn't sure this was faster

than from the Internet, but hey. Started seeing a series of

post-purchase ad screens, including "Great for Gaming", which, well,

yeah but also had Windows on the box so…

Rebooted from SSD: Ubuntu came up as the first option in

the micro-menu, so I took it. Looked like Windows was there also though,

as expected. Logged in and there it was: success! Went through a brief

post-installation dialog and was looking at a running system.

The total time for this step was about four hours. If repeated, it

would probably still be about two: there was a lot of sit-and-wait, and

a lot of menus to wade through.
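
For the bootstick step, here is a minimal sketch of the "check the device right before the copy" habit, assuming GNU `dd` and util-linux `lsblk` are installed. The function names `is_whole_disk` and `write_iso`, the confirmation prompt, and the `/dev/sdX` path are all made up for illustration. The check in these notes was done with `parted`; `lsblk` is swapped in here only because its one-line output is quick to eyeball. The name check covers just the common `sdX`, `nvmeXnY`, and `mmcblkX` schemes and refuses anything else.

```shell
#!/bin/sh
# Sketch only: nothing is written unless write_iso is called explicitly.

# Return 0 if the device name looks like a whole disk, 1 if it looks like
# a partition or is not a recognized naming scheme.
is_whole_disk() {
    case "${1##*/}" in
        sd*[0-9]) return 1 ;;                       # sdb1: partition
        sd[a-z]*) return 0 ;;                       # sdb: whole disk
        nvme*p[0-9]*|mmcblk*p[0-9]*) return 1 ;;    # nvme0n1p2, mmcblk0p1
        nvme*n[0-9]*|mmcblk[0-9]*) return 0 ;;      # nvme0n1, mmcblk0
        *) return 1 ;;                              # unknown: refuse
    esac
}

# Copy an ISO onto a whole-disk device after showing what that device is.
# Usage: write_iso IMAGE DEVICE
write_iso() {
    iso=$1
    dev=$2
    if ! is_whole_disk "$dev"; then
        echo "refusing: $dev does not look like a whole disk" >&2
        return 1
    fi
    # Show size, model, and removable flag so a wrong target stands out.
    lsblk -d -o NAME,SIZE,MODEL,RM "$dev" || return 1
    printf 'Overwrite %s with %s? [y/N] ' "$dev" "$iso"
    read -r answer
    [ "$answer" = y ] || return 1
    # conv=fsync flushes to the device before dd reports completion.
    sudo dd if="$iso" of="$dev" bs=4M conv=fsync status=progress
}
```

Something like `write_iso plucky-desktop-amd64.iso /dev/sdX` would then do the copy, with `/dev/sdX` replaced by whatever the stick actually shows up as. The prompt is a last chance to notice that the "8GB stick" is listed as a 2TB drive.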

Configuring Linux

The fun doesn't stop when the desktop boots. Oh no.

I needed to configure my machine for my use cases. How hard could it

be?

Installed a terminal: The startup had pointed out the

"App Center" icon in the hotbar. I opened it. Took me a moment to

realize that it was locked to "snap packages". Ugh. Switched to "Debian

packages". Nothing under "terminal". Huh.

Found the "Show Apps" start menu thingy. Found a "Terminal" and

pinned it to the hotbar "dock". Apparently it's called a

dock.

Ah! The battery-looking bar icon in the upper-left corner took me